There are a couple of ways you can bodge something together to get a usable interface for clipping … I won’t talk about the TTS portion since I haven’t done any of that before.

Method 1: Share to Tasker → Task Saves to Vault

- Tasker: AutoShare to handle the Android “Share” dialog.

- Android Share dialog launches a custom Tasker Input dialog and you then handle the file saving to the vault.
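To make the "handle the file saving" part of Method 1 a bit more concrete, here is a rough Python sketch of the logic the Tasker task would need: clean up the title, put a small front matter block on top, and write a `.md` file into the vault folder. In Tasker itself this would be a file-writing action rather than Python, and `save_clip`, its parameters, and the character list being stripped are all my own assumptions for illustration.

```python
import re
from pathlib import Path


def save_clip(vault_dir: Path, title: str, body: str, note_type: str = "link") -> Path:
    """Write shared text into the vault as a Markdown note (illustrative helper)."""
    # Drop characters that are awkward in file names or Obsidian links (assumed list).
    safe_title = re.sub(r'[\\/:*?"<>|#^\[\]]', "", title).strip() or "Untitled"
    note_path = vault_dir / f"{safe_title}.md"
    front_matter = f"---\ntype: {note_type}\n---\n\n"
    # Note: this overwrites an existing note with the same name.
    note_path.write_text(front_matter + body + "\n", encoding="utf-8")
    return note_path
```

Obsidian picks up files that appear in the vault folder, so nothing else should be needed once the file is written.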

Method 2: Share to Tasker → Task Redirects to Vault Directly via URI Handler. This launches the Create Note dialog with the data already filled in.

```
Task: S::Obsidian-NewNote

<The Vault>
A1: [X] Variable Set [
    Name: %obsidian_vault_name
    To: "Notes.Main Vault"
    Structure Output (JSON, etc): On ]

<The Vault>
A2: Variable Set [
    Name: %obsidian_vault_name
    To: Lightweight.Temp
    Structure Output (JSON, etc): On ]

<The Title : Leave blank for default.>
A3: Variable Set [
    Name: %obsidian_note_title
    To: Untitled
    Structure Output (JSON, etc): On ]

<FrontMatter: type>
A4: Variable Set [
    Name: %obsidian_note_type
    To: link
    Structure Output (JSON, etc): On ]

<The Content : Leave blank for default.>
A5: Variable Set [
    Name: %obsidian_note_content
    To: ---%0Atype%3A%20%obsidian_note_type%0A---%0A%0A%0A%0A%23link%20%23url%20%23from-android
    Structure Output (JSON, etc): On ]

<New Note X-Callback URI>
A6: Variable Set [
    Name: %obsidian_call_uri
    To: obsidian://new?vault=%obsidian_vault_name&name=%obsidian_note_title&content=%obsidian_note_content
    Structure Output (JSON, etc): On ]

<DEBUG: New Note X-Callback URI>
A7: [X] Variable Set [
    Name: %Obsidian_call_uri
    To: obsidian://new?vault=%obsidian_vault_name&name=%obsidian_note_title&content=%obsidian_note_content
    Structure Output (JSON, etc): On ]

<Debug Output>
A8: [X] Flash [
    Text: %obsidian_call_uri
    Continue Task Immediately: On
    Dismiss On Click: On ]

<The Call on the app>
A9: Browse URL [
    URL: %obsidian_call_uri
    Package/App Name: Obsidian ]

A10: Stop [ ]
```
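If you want to sanity-check the URI outside of Tasker, here is a rough Python sketch of what A3 to A6 assemble. The function name `build_new_note_uri` is mine and not part of Obsidian or Tasker; it assumes the `obsidian://new` action with the `vault`, `name`, and `content` parameters used in the task, and it percent-encodes each value the same way the hand-encoded string in A5 does.

```python
from urllib.parse import quote


def build_new_note_uri(vault: str, title: str, note_type: str) -> str:
    """Assemble an obsidian://new URI like the one A6 builds (illustrative helper)."""
    # Same body as A5: front matter, three blank lines, then the tags.
    content = f"---\ntype: {note_type}\n---\n\n\n\n#link #url #from-android"
    params = {"vault": vault, "name": title, "content": content}
    # safe="" makes quote() encode every reserved character (space, #, :, newline).
    query = "&".join(f"{key}={quote(value, safe='')}" for key, value in params.items())
    return f"obsidian://new?{query}"


uri = build_new_note_uri("Lightweight.Temp", "Untitled", "link")
print(uri)
```

With the values from the task this should print the same string Tasker ends up with in `%obsidian_call_uri`. A vault name containing a space, like the one in the disabled A1, would come out as `Notes.Main%20Vault`.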

NOTE: This works for Android 15, but has been hit or miss on Android 16 Beta 1. I haven’t looked into why that is yet.

What I do is use a very lightweight Obsidian “Vault” that launches near instantly (more below), then I have a FolderSync job that runs nightly and moves (not copies) those “notes” over to my main vault. Doing it this roundabout way gets Obsidian open very quickly for quick note taking / clipping … it launches in about 1 sec or less, compared with about 8 seconds for my main vault.
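FolderSync does the nightly move for me, but the idea is simple enough to sketch in Python if you would rather script it. This is only a stand-in for what the sync job does, not how FolderSync works internally; `move_notes`, the folder arguments, and the "add a number on name clash" rule are all assumptions of mine.

```python
import shutil
from pathlib import Path


def move_notes(light_vault: Path, main_inbox: Path) -> list[Path]:
    """Move (not copy) top-level Markdown notes from the light vault into the main vault."""
    main_inbox.mkdir(parents=True, exist_ok=True)
    moved = []
    # Only top-level *.md files are touched, so the .obsidian config folder stays put.
    for note in sorted(light_vault.glob("*.md")):
        target = main_inbox / note.name
        counter = 1
        # Don't clobber an existing note with the same name (e.g. several "Untitled").
        while target.exists():
            target = main_inbox / f"{note.stem} {counter}{note.suffix}"
            counter += 1
        shutil.move(str(note), str(target))
        moved.append(target)
    return moved


# Example call with made-up paths:
# move_notes(Path("/sdcard/Vaults/Lightweight.Temp"), Path("/sdcard/Vaults/Main/Inbox"))
```

Because the files are moved rather than copied, the lightweight vault is empty again after each run, which helps keep it fast to open.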

I have most plugins disabled on this lightweight vault, the default theme, and a lot of the internal core plugins disabled (e.g. graph, canvas, backlinks, etc.).

See some more here: Obsidian URI - Obsidian Help

You’ll notice there are also params for appending, clipboard, silent (process, but don’t open the vault/app), and an assortment of others. For a few of these the docs don’t spell out the exact syntax, but I believe something like append would be &append=true (same for clipboard and silent).
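As a sketch of how those extra params might be tacked on, here is a small Python helper that builds an append-style URI. The `=true` form is my guess as noted above and not something I have confirmed against the docs, and `build_append_uri` plus the example note name are made up for illustration.

```python
from urllib.parse import quote


def build_append_uri(vault: str, name: str, content: str, silent: bool = True) -> str:
    """Build an obsidian://new URI that appends to a note (flag syntax is an assumption)."""
    parts = [
        f"vault={quote(vault, safe='')}",
        f"name={quote(name, safe='')}",
        f"content={quote(content, safe='')}",
        "append=true",  # assumed form; the bare flag may be enough
    ]
    if silent:
        parts.append("silent=true")  # assumed form, same caveat as append
    return "obsidian://new?" + "&".join(parts)


append_uri = build_append_uri("Lightweight.Temp", "Clippings", "\n- https://example.com")
print(append_uri)
```

If the `=true` form turns out not to work, swapping the two strings for bare `append` and `silent` is the first thing I would try.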

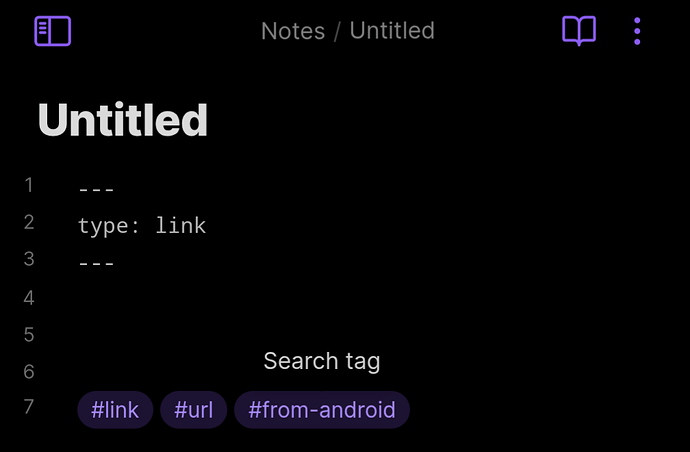

The Tasker task above has some example content that creates this: