Script:

```python
import os
import platform
import re
from typing import Optional, Tuple

import openai

# Example usage (Gemini also supports selecting a section with a regex):
# @groq_chat_with_files 'Filename' `start[\s\S]*?end` Tell me about this section
# @gemini_chat_with_files 'Filename' Tell me about this file

# Path configuration - MODIFY THESE TO YOUR PATHS
WINDOWS_BASE_PATH = r"C:\path\to\your\obsidian\vault"
LINUX_BASE_PATH = "/path/to/your/obsidian/vault"
MACOS_BASE_PATH = "/Users/yourusername/path/to/your/obsidian/vault"

# Determine the base path from the operating system
if platform.system() == "Linux":
    BASE_PATH = LINUX_BASE_PATH
elif platform.system() == "Darwin":  # macOS
    BASE_PATH = MACOS_BASE_PATH
else:  # Windows or other
    BASE_PATH = WINDOWS_BASE_PATH

# Default system instruction sent with every request
DEFAULT_SYSTEM_INSTRUCTION = """Use markdown but do NOT apply markdown code blocks."""

# Conversation history shared by both chat functions (reset with clean_chat)
__chat_messages = []

# API Keys - REPLACE WITH YOUR OWN KEYS
GROQ_API_KEY = "your_groq_api_key_here"
GEMINI_API_KEY = "your_gemini_api_key_here"


def create_groq_client():
    """Create and return an OpenAI client configured for Groq's OpenAI-compatible API."""
    return openai.OpenAI(
        api_key=GROQ_API_KEY,
        base_url="https://api.groq.com/openai/v1",
    )


def create_gemini_client(model_name: str):
    """Create and return a configured Gemini model."""
    try:
        import google.generativeai as genai
    except ImportError:
        raise ImportError(
            "Google Generative AI package not found. "
            "Please install it using: pip install google-generativeai"
        )

    genai.configure(api_key=GEMINI_API_KEY)
    return genai.GenerativeModel(
        model_name=model_name,
        generation_config=genai.GenerationConfig(
            temperature=0.7,
            max_output_tokens=64000,
        ),
        # Do not block responses in any of these harm categories
        safety_settings=[
            {"category": "HARM_CATEGORY_HARASSMENT", "threshold": "BLOCK_NONE"},
            {"category": "HARM_CATEGORY_HATE_SPEECH", "threshold": "BLOCK_NONE"},
            {"category": "HARM_CATEGORY_SEXUALLY_EXPLICIT", "threshold": "BLOCK_NONE"},
            {"category": "HARM_CATEGORY_DANGEROUS_CONTENT", "threshold": "BLOCK_NONE"},
        ],
    )


def normalize_regex_pattern(pattern: str) -> str:
    r"""Convert JavaScript-style [^]* wildcards into the Python equivalent [\s\S]*?.

    Python's re module rejects [^] as an unterminated character set. A plain .*?
    needs no conversion, because read_file_content() searches with re.DOTALL,
    so "." already matches newlines.
    """
    if not pattern:
        return pattern
    # Replace the lazy form first so "[^]*?" doesn't turn into the invalid "[\s\S]*??"
    pattern = pattern.replace("[^]*?", r"[\s\S]*?")
    return pattern.replace("[^]*", r"[\s\S]*?")


def parse_input(input_str: str) -> Tuple[str, Optional[str], str]:
    """Split the input into (filename, optional regex pattern, question).

    Expected format: 'Filename' `optional regex` question text
    """
    try:
        input_str = input_str.strip()

        # Clean up raw-string artifacts left by the plugin's r"""##param##""" mapping
        if input_str.startswith('r"""'):
            input_str = input_str.replace('r"""', '', 1).strip()
        if input_str.endswith('"""'):
            input_str = input_str[:-3].strip()

        # The filename must be wrapped in single quotes; DOTALL allows multi-line questions
        quoted_filename_match = re.match(r"^'([^']+)'\s*(.*)$", input_str, re.DOTALL)
        if not quoted_filename_match:
            raise ValueError("Filename must be wrapped in single quotes")

        filename = quoted_filename_match.group(1).strip()
        remaining = quoted_filename_match.group(2)

        # An optional regex pattern sits between backticks; the rest is the question
        if '`' in remaining:
            pattern_parts = remaining.split('`')
            pattern = normalize_regex_pattern(pattern_parts[1])
            question = '`'.join(pattern_parts[2:]).strip()
        else:
            pattern = None
            question = remaining.strip()

        return filename, pattern, question

    except Exception as e:
        print(f"Input parsing error: {str(e)}")
        raise


def find_file(base_path: str, filename: str) -> Optional[str]:
    """Search base_path recursively for '<filename>.md' and return its full path."""
    filename_with_ext = f"{filename}.md"
    for root, _, files in os.walk(base_path):
        if filename_with_ext in files:
            return os.path.join(root, filename_with_ext)
    return None


def read_file_content(filename: str, pattern: Optional[str] = None) -> str:
    """Read a note's content, optionally returning only the first regex match."""
    try:
        filepath = find_file(BASE_PATH, filename)
        if not filepath:
            raise FileNotFoundError(
                f"File '{filename}.md' not found in your vault directory or its subdirectories."
            )

        with open(filepath, 'r', encoding='utf-8') as f:
            content = f.read()

        if pattern:
            # re.DOTALL lets "." match newlines as well
            try:
                match = re.search(pattern, content, re.DOTALL)
            except re.error as e:
                raise ValueError(f"Invalid regex pattern: {str(e)}")
            if not match:
                raise ValueError(f"Pattern '{pattern}' not found in file content.")
            return match.group(0)

        return content

    except Exception as e:
        raise Exception(f"Error reading file: {str(e)}")


def groq_chat_with_files(input_str: str, system: Optional[str] = DEFAULT_SYSTEM_INSTRUCTION,
                         save_context: bool = True, model: str = 'llama-3.3-70b-versatile') -> None:
    """Chat with file content using Groq."""
    global __chat_messages
    try:
        filename, pattern, question = parse_input(input_str)
        content = read_file_content(filename, pattern)
        client = create_groq_client()

        # Chat format: system message, earlier turns, then the new user turn
        msg = []
        if system:
            msg.append({"role": "system", "content": system})
        if save_context:
            msg += __chat_messages

        context_msg = f"Content from file '{filename}':\n\n{content}\n\n"
        if question:
            context_msg += f"Question: {question}"
        msg.append({"role": "user", "content": context_msg})

        try:
            completion = client.chat.completions.create(model=model, messages=msg)
            response = completion.choices[0].message.content
            if save_context:
                __chat_messages += [
                    {"role": "user", "content": context_msg},
                    {"role": "assistant", "content": response},
                ]
            print(response + '\n')
        except Exception as e:
            # If the request fails (e.g. the context is too long), drop the oldest
            # saved exchange and retry; give up once no history is left
            if save_context and len(__chat_messages) >= 2:
                del __chat_messages[:2]
                return groq_chat_with_files(input_str, system, save_context, model)
            print(f"Chat API error: {str(e)}")

    except Exception as e:
        print(f"Error: {str(e)}")


def gemini_chat_with_files(input_str: str, system: Optional[str] = DEFAULT_SYSTEM_INSTRUCTION,
                           save_context: bool = True, model: str = "gemini-2.0-flash-thinking-exp") -> None:
    """Chat with file content using Google's Generative AI (Gemini)."""
    global __chat_messages
    try:
        filename, pattern, question = parse_input(input_str)
        content = read_file_content(filename, pattern)
        gemini_model = create_gemini_client(model)

        # Gemini gets one text prompt: system instruction, flattened history, new message
        prompt_parts = []
        if system:
            prompt_parts.append(system + "\n\n")
        if save_context and __chat_messages:
            history = "\n\n".join(
                f"{'User' if msg['role'] == 'user' else 'Assistant'}: {msg['content']}"
                for msg in __chat_messages
            )
            prompt_parts.append(history + "\n\n")

        context_msg = f"Content from file '{filename}':\n\n{content}\n\n"
        if question:
            context_msg += f"Question: {question}"
        prompt_parts.append(context_msg)

        try:
            response = gemini_model.generate_content("".join(prompt_parts))
            text = response.text
            if save_context:
                __chat_messages += [
                    {"role": "user", "content": context_msg},
                    {"role": "assistant", "content": text},
                ]
            print(text + '\n')
        except Exception as e:
            # Same recovery as the Groq function: drop the oldest exchange and retry
            if save_context and len(__chat_messages) >= 2:
                del __chat_messages[:2]
                return gemini_chat_with_files(input_str, system, save_context, model)
            print(f"Chat API error: {str(e)}")

    except Exception as e:
        print(f"Error: {str(e)}")


def clean_chat() -> None:
    """Clear the shared chat history."""
    global __chat_messages
    __chat_messages = []
```

The above script is untested, as I had to remove multiple lines relating to my own use case, languages, etc. It should still work, but if it doesn’t, ask an AI to help you customize it.

The guide below was also written by AI.

Tip: The Groq model currently set as the default isn’t very smart. You’ll want a better one as a fallback for when Gemini is overloaded.

Tip 2: Create a Templater script that you can run while in a note. It fills in the current file’s basename, so you don’t have to type it in each time:

```
<%*
const currentFile = app.workspace.getActiveFile();
tR += "@gemini_chat_with_files " + `'${currentFile.basename}'` + " \`start[\\s\\S]*?end\`" + " add_question_here";
_%>
```

Or just this, if you don’t want to use regex to match sections from large documents:

```
<%*
const currentFile = app.workspace.getActiveFile();
tR += "@gemini_chat_with_files " + `'${currentFile.basename}'` + " add_question_here";
_%>
```

- The filename must be wrapped in single quotes, as shown.

- You call the script’s Gemini function with `@gemini_chat_with_files`, so you need to add the mapping `@gemini_chat_with_files -> gemini_chat_with_files(r\"\"\"##param##\"\"\")` to the plugin’s configuration, as described in the plugin developer’s guide. Do the same for `groq_chat_with_files`, and for any other function you write in other scripts.

In other words, what you call are the function names defined in your Python scripts.
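To see how a command is split up, here is a standalone sketch that mirrors the script’s `parse_input()` logic: the quoted filename, the optional backtick-wrapped regex, and the question. It assumes the plugin passes the text typed after the command name to the function as the `##param##` value. `split_command` is a hypothetical helper name, not part of the script.

```python
import re
from typing import Optional, Tuple


def split_command(param: str) -> Tuple[str, Optional[str], str]:
    """Hypothetical helper mirroring parse_input(): returns (filename, pattern, question)."""
    # The filename must come first, wrapped in single quotes
    match = re.match(r"^'([^']+)'\s*(.*)$", param.strip(), re.DOTALL)
    if not match:
        raise ValueError("Filename must be wrapped in single quotes")
    filename, remaining = match.group(1).strip(), match.group(2)

    # An optional regex pattern sits between the first pair of backticks
    if "`" in remaining:
        parts = remaining.split("`")
        return filename, parts[1], "`".join(parts[2:]).strip()
    return filename, None, remaining.strip()


print(split_command(r"'Research Notes' `## Literature Review[\s\S]*?##` What are the key insights?"))
# ('Research Notes', '## Literature Review[\\s\\S]*?##', 'What are the key insights?')
```

Because the question is whatever follows the closing backtick, a question may itself contain backticks without breaking the split.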

Obsidian AI Interactivity Script Guide

This script allows you to use Groq and Gemini AI models to analyze and interact with your Obsidian notes directly through the Interactivity Plugin. You can query entire files or specific sections within them using regular expressions.

Setup Instructions

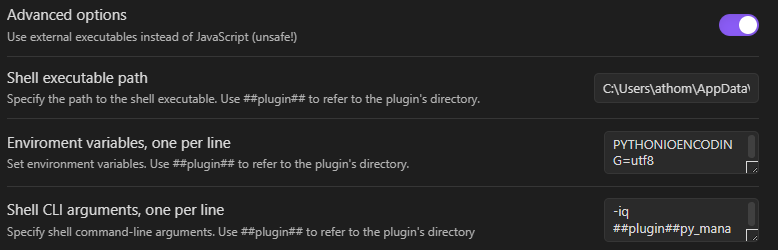

- Install the Interactivity plugin in Obsidian

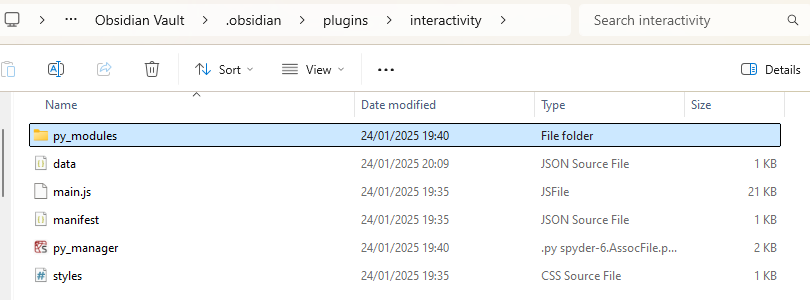

- Copy the `chat_with_gemini_and_groq.py` file to your scripts folder for the Interactivity plugin

- Update the following in the script:

    - Set your Obsidian vault path for Windows, macOS, and Linux
    - Add your Groq API key (get one from groq.com)
    - Add your Gemini API key (get one from Google AI Studio)

- Install the required Python packages: `pip install openai google-generativeai`
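If you’d rather not paste API keys into the script file, one common alternative is to read them from environment variables. This is a sketch, not part of the original script: the variable names `GROQ_API_KEY` and `GEMINI_API_KEY` are assumptions, `load_api_key` is a hypothetical helper, and the variables must be set in the environment the Interactivity plugin starts Python from.

```python
import os


def load_api_key(env_var: str, placeholder: str) -> str:
    """Return the key from the environment, falling back to the hardcoded placeholder."""
    # The environment variable names used below are assumptions; use whatever you export
    return os.environ.get(env_var, placeholder)


# Possible drop-in replacements for the hardcoded constants in the script
GROQ_API_KEY = load_api_key("GROQ_API_KEY", "your_groq_api_key_here")
GEMINI_API_KEY = load_api_key("GEMINI_API_KEY", "your_gemini_api_key_here")
```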

Using the Script

The script provides two main functions that you can use within Obsidian’s Interactivity plugin:

1. Using Groq

Basic syntax:

```
@groq_chat_with_files 'Filename' Your question here
```

With section selection using regex:

```
@groq_chat_with_files 'Filename' `regex_pattern` Your question here
```

- Regex example: `start[\s\S]*?end`

2. Using Gemini

Basic syntax:

```
@gemini_chat_with_files 'Filename' Your question here
```

With section selection using regex:

```
@gemini_chat_with_files 'Filename' `regex_pattern` Your question here
```

- Regex pattern for sections again: `start[\s\S]*?end`, e.g. `The boss said[\s\S]*?not happy about it\.`
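The script passes the pattern to Python’s `re.search` with `re.DOTALL` and sends only the matched text to the model. Here is a small standalone check using made-up note text, showing what the example pattern above would select:

```python
import re

# Made-up note content for illustration
note = """Monday stand-up.
The boss said the release slipped again
and that he was not happy about it. Then we moved on.
Tuesday: nothing new."""

# Lazy [\s\S]*? stops at the FIRST "not happy about it." after "The boss said"
pattern = r"The boss said[\s\S]*?not happy about it\."
match = re.search(pattern, note, re.DOTALL)
print(match.group(0))
```

Note the escaped `\.` at the end: an unescaped `.` would match any character.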

3. Clear Chat History

To clear the conversation context:

```
@clean_chat
```
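Both chat functions share one module-level history list. Each successful call appends the user message (file content plus question) and the model’s answer, and `clean_chat()` empties the list. The sketch below models that bookkeeping on its own. `record_exchange` is a hypothetical name, and `.clear()` stands in for the script’s reassignment to an empty list.

```python
from typing import Dict, List

chat_messages: List[Dict[str, str]] = []


def record_exchange(user_text: str, reply: str) -> None:
    """Append one user/assistant pair, as the script does after a successful call."""
    chat_messages.extend([
        {"role": "user", "content": user_text},
        {"role": "assistant", "content": reply},
    ])


def clean_chat() -> None:
    """Forget the conversation so the next question starts fresh."""
    chat_messages.clear()


record_exchange("Content from file 'Project Ideas': ...", "Here is a summary ...")
record_exchange("Content from file 'Project Ideas': ... Question: Which is cheapest?", "Idea 2.")
print(len(chat_messages))  # 4
clean_chat()
print(len(chat_messages))  # 0
```

Every saved user message includes the full note (or section) text, so the history grows quickly. Running `@clean_chat` when you switch to an unrelated note keeps requests small.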

Examples

Example 1: Ask about an entire file

```
@groq_chat_with_files 'Project Ideas' Summarize the main project ideas in this file
```

Example 2: Ask about a specific section

```
@gemini_chat_with_files 'Research Notes' `## Literature Review[\s\S]*?##` What are the key insights from the literature review?
```

Regular Expression Tips

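- Use `[\s\S]*?` to match any content (including newlines) between two markers.

- For sections in Obsidian, you can match from one header to the next with `## Header1[\s\S]*?##`. Note that the match includes the `##` that opens the next header, and that it stops at the first `##` it meets, including the one at the start of a `###` subheading.

- To match from a header to the end of the file, use a greedy pattern: `## Last Section[\s\S]*`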
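Because `## Header1[\s\S]*?##` stops at the first `##`, a `###` subheading cuts the section short. One way around this is a lookahead that stops just before the next level-2 header or the end of the note. This is a sketch using standard `re` features (the inline `(?m)` flag, `^`, the `(?=...)` lookahead and `\Z`). The note text is made up, and you may need to adjust the header level for your notes.

```python
import re

# Made-up note with a level-3 subheading inside the section
note = """# Research Notes
## Literature Review
Smith (2020) found X.
### Open questions
Does X hold for Y?
## Methods
Survey of 40 users.
"""

naive = r"## Literature Review[\s\S]*?##"
# (?m) lets ^ match at line starts; the lookahead stops before the next "## " header
# (or at the end of the text, \Z) without including it in the match
better = r"(?m)^## Literature Review[\s\S]*?(?=^## |\Z)"

print(repr(re.search(naive, note, re.DOTALL).group(0)))   # cut off at "###"
print(repr(re.search(better, note, re.DOTALL).group(0)))  # whole section, subheading included
```

The inline `(?m)` flag travels with the pattern, so it still applies when the script calls `re.search(pattern, content, re.DOTALL)` with the pattern you typed between the backticks.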

Customizing AI Models

Changing Groq Models

You can change the Groq model by modifying the default parameter in the groq_chat_with_files function:

```python
def groq_chat_with_files(input_str: str, system: Optional[str] = DEFAULT_SYSTEM_INSTRUCTION,
                         save_context: bool = True, model: str = 'llama-3.3-70b-versatile') -> None:
```

Available Groq models include:

- llama-3.3-70b-versatile
- llama-3.1-8b-instant
- gemma-2-27b-it
- mixtral-8x7b-32768

Changing Gemini Models

You can change the Gemini model by modifying the default parameter in the gemini_chat_with_files function:

```python
def gemini_chat_with_files(input_str: str, system: Optional[str] = DEFAULT_SYSTEM_INSTRUCTION,
                           save_context: bool = True, model: str = "gemini-2.0-flash-thinking-exp") -> None:
```

Available Gemini models include:

- gemini-2.0-flash-thinking-exp
- gemini-2.0-flash-thinking-exp-01-21
- gemini-2.0-pro-latest
- gemini-1.5-pro
- gemini-1.5-flash

Customizing System Instructions

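You can modify the DEFAULT_SYSTEM_INSTRUCTION variable to change how the AI responds. For example, to also rule out emojis:

```python
DEFAULT_SYSTEM_INSTRUCTION = """Use markdown but do NOT apply markdown code blocks. Do NOT use emojis."""
```

Both chat functions also accept a `system` argument, so an individual call can override the default.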
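The system instruction reaches the two providers in different ways. The Groq function sends it as a `system`-role chat message, while the Gemini function prepends it to a single text prompt along with the flattened history. This standalone sketch reproduces that assembly so you can see what each model receives. The helper names are hypothetical, and the history format follows the script’s list of role/content dicts.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]


def build_groq_messages(system: Optional[str], history: List[Message], user_msg: str) -> List[Message]:
    """Chat-completions format: system message, prior turns, then the new user turn."""
    messages: List[Message] = []
    if system:
        messages.append({"role": "system", "content": system})
    messages += history
    messages.append({"role": "user", "content": user_msg})
    return messages


def build_gemini_prompt(system: Optional[str], history: List[Message], user_msg: str) -> str:
    """Single text prompt: system text, 'User:'/'Assistant:' transcript, then the new message."""
    parts: List[str] = []
    if system:
        parts.append(system + "\n\n")
    if history:
        transcript = "\n\n".join(
            f"{'User' if m['role'] == 'user' else 'Assistant'}: {m['content']}" for m in history
        )
        parts.append(transcript + "\n\n")
    parts.append(user_msg)
    return "".join(parts)


system = "Use markdown but do NOT apply markdown code blocks. Do NOT use emojis."
history = [{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hello"}]
print(build_gemini_prompt(system, history, "Question: Summarize this file"))
```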

Troubleshooting

- File not found error: Make sure your base path is correctly set for your operating system (Windows, macOS, or Linux) and that the file exists within your vault. Pass the note’s name without the `.md` extension: the script appends it and looks for an exact, case-sensitive filename match. On macOS, remember that Python’s platform detection reports the system name as “Darwin”, not “MacOS”.

- API errors: Verify that your API keys are correct and have sufficient credits/quota.

- Module not found errors: Install the required packages with pip: `pip install openai google-generativeai`

- Regex pattern not found: Test your regex patterns separately to make sure they match the content you’re looking for.
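For file-not-found and regex problems, it can help to run the lookup and the regex outside Obsidian before any API is involved. The sketch below repeats the script’s search (an exact `<name>.md` match anywhere under the vault, then `re.search` with `re.DOTALL`) and reports what it finds. `diagnose` is a hypothetical helper, and the vault path in the usage comment is a placeholder for your own.

```python
import os
import re
from typing import Optional


def diagnose(base_path: str, filename: str, pattern: Optional[str] = None) -> str:
    """Report whether '<filename>.md' exists under base_path and whether pattern matches it."""
    target = f"{filename}.md"
    for root, _, files in os.walk(base_path):
        if target in files:
            path = os.path.join(root, target)
            break
    else:
        # os.walk yields nothing for a missing base_path, so this also catches a wrong path
        return f"Not found: {target} under {base_path}"

    if pattern is None:
        return f"Found: {path}"

    with open(path, encoding="utf-8") as f:
        content = f.read()
    try:
        match = re.search(pattern, content, re.DOTALL)
    except re.error as e:
        return f"Invalid regex: {e}"
    if not match:
        return f"Found {path}, but the pattern matched nothing"
    return f"Found {path}; match starts with: {match.group(0)[:60]!r}"


# Example (replace with your vault path and note name):
# print(diagnose("/path/to/your/obsidian/vault", "Research Notes", r"## Literature Review[\s\S]*?##"))
```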

Staying Updated

AI models are frequently updated. If a model becomes deprecated:

- Check the provider’s documentation for the latest model names:

- Update the default model parameter in the relevant function
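Alongside the documentation, you can ask each API which models your key can use. This sketch makes two assumptions. The first is the `openai` client from the script, pointed at Groq’s OpenAI-compatible endpoint, whose `models.list()` yields objects with an `id`. The second is the `google-generativeai` package’s `genai.list_models()`, which yields models with a `name` (such as `models/gemini-1.5-flash`) and `supported_generation_methods`. The functions take the client or module as a parameter, so you can try them without network access.

```python
from typing import List


def groq_model_ids(client) -> List[str]:
    """IDs of models visible to an openai.OpenAI client pointed at Groq."""
    # Iterating client.models.list() walks the model list; each item has an .id
    return sorted(model.id for model in client.models.list())


def gemini_model_names(genai) -> List[str]:
    """Gemini models that support generateContent, the call the script makes."""
    # str.removeprefix needs Python 3.9+
    return sorted(
        m.name.removeprefix("models/")
        for m in genai.list_models()
        if "generateContent" in m.supported_generation_methods
    )


# Usage with real credentials (requires network):
# import openai
# import google.generativeai as genai
# client = openai.OpenAI(api_key=GROQ_API_KEY, base_url="https://api.groq.com/openai/v1")
# print(groq_model_ids(client))
# genai.configure(api_key=GEMINI_API_KEY)
# print(gemini_model_names(genai))
```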